Evidence of meat-eating among our distant human ancestors is hard to find and even harder to interpret, but researchers are beginning to piece together a coherent picture.

Over the course of six million years of human evolution, brain size increased 300 percent. Our huge, complex brains can store and process decades’ worth of information in split seconds, solve multifactorial problems, and create abstract ideas and images. This would have been a big advantage to early humans as they were spreading out across Africa and into Asia just under two million years ago, encountering unfamiliar habitats, novel carnivore competitors, and different prey animals. Yet our large brains come at a cost, making childbirth more difficult and painful for human mothers than for our nearest evolutionary kin. Modern human brains take up only about 2 percent of our body weight as adults, but use about 20 percent of our energy. Such a disproportionate use of resources calls for investigation. For years, my colleagues and I have explored the idea that meat-eating may have played a role in this unusual aspect of human biology.

In 1995, Leslie Aiello and Peter Wheeler developed the expensive tissue hypothesis to explain how our huge brains evolved without bringing about a tremendous increase in our rate of metabolism. Aiello, then of University College London, and Wheeler, then of Liverpool John Moores University, proposed that the energetic requirements of a large brain may have been offset by a reduction in the size of the liver and gastrointestinal tract; these organs, like the brain, have metabolically expensive tissues. Because gut size is correlated with diet, and small guts necessitate a diet focused on high-quality food that is easy to digest, Aiello and Wheeler reasoned that the nutritionally dense muscle mass of other animals was the key food that allowed the evolution of our large brains. Without the abundance of calories afforded by meat-eating, they maintain, the human brain simply could not have evolved to its current form.

Although the modern “paleodiet” movement often claims that our ancestors ate large amounts of meat, we still don’t know the proportion of meat in the diet of any early human species, nor how frequently meat was eaten. Modern hunter-gatherers have incredibly varied diets, some of which include fairly high amounts of meat, but many of which don’t. Still, we do know that meat-eating was one of the most pivotal changes in our ancestors’ diets and that it led to many of the physical, behavioral, and ecological changes that make us uniquely human.

Our Omnivorous Ancestors

The diet of our earliest ancestors, who lived about six million years ago in Africa, was probably much like that of chimpanzees, our closest living primate cousins, who generally inhabit forest and wet savanna environments in equatorial Africa. Chimpanzees mainly eat fruit and other plant parts such as leaves, flowers, and bark, along with nuts and insects. Meat from the occasional animal forms only about 3 percent of the average chimpanzee’s diet. In 2009, Claudio Tennie, of the Max Planck Institute for Evolutionary Anthropology, and his colleagues developed a hypothesis that offered a nutritional perspective on the group hunting they had observed in the chimpanzees in Gombe National Park, in Tanzania. According to this hypothesis, the micronutrients gained from meat are so important that even small scraps of meat are worth the very high energy expenditure that cooperative hunting entails. Important components of meat include not only vitamins A and K, calcium, sodium, and potassium, but also iron, zinc, vitamin B6, and vitamin B12; the latter, although necessary for a balanced primate diet, is present only in small quantities in plants. In addition, macronutrients such as fat and protein, hard to come by in the environments where chimpanzees live, may be important dietary components of meat-eating.

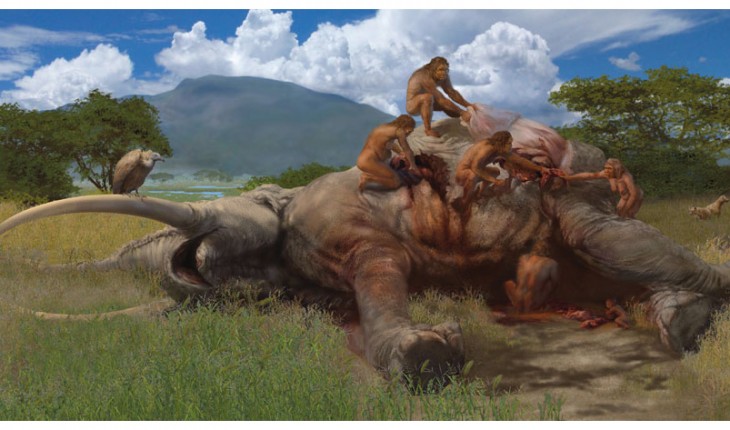

The fossil record offers evidence that meat-eating by humans differs from chimpanzees’ meat-eating in four crucial ways. First, even the earliest evidence of meat-eating indicates that early humans were consuming not only small animals but also animals many times larger than their own body size, such as elephants, rhinos, buffalo, and giraffes, whereas chimpanzees only hunt animals much smaller than themselves. Second, early humans generally used tools when they procured and processed meat. (Of course, meat-eating by human ancestors could have taken place before early humans developed the ability to procure meat by means of tools, but so far no one has determined whether the fossil record would show any evidence of it or what the evidence would look like.) Third, as we will see later, it’s likely that much of the first meat eaten by early humans came not from hunting but from scavenging; by contrast, observations of chimpanzees scavenging are extremely rare. Fourth, like humans today, our early ancestors didn’t always eat food as soon as they encountered it. Sometimes they brought it back to a central place or home base, presumably to share with members of their social group, including unrelated adults. This behavior, the delaying of food consumption, is not observed in chimpanzees, and it holds important implications for how these early humans interacted with one another socially.

Hunters or Scavengers?

The investigation of early human meat-eating in Africa began in 1925, with the earliest discovery of human fossils there. Raymond Dart, a professor of anatomy at the University of the Witwatersrand in Johannesburg, South Africa, named a new early human species Australopithecus africanus (meaning “southern ape from Africa”) after a small fossil skull from the site of Taung. The skull has since been identified as that of a three-year-old child who died about 2.8 million years ago. In other fossils at the same site Dart saw evidence of meat-eating, such as baboon skulls bearing signs of fracture and removal of the brain case prior to fossilization, with v-shaped marks on the broken edges and small puncture marks in the skull vault. He concluded the Taung child had belonged to a predatory, cave-dwelling species he described as “an animal-hunting, flesh-eating, shell-cracking and bone-breaking ape” and “a practised and skilful wielder of lethal weapons of the chase.” The concept of the killer ape was born.

Also put forth as evidence for early human hunting are patterns in the types of animal bones found in the fossil record at early human sites in East and South Africa; such patterns are called skeletal part profiles. The bulk of the data came from the well-known site of Olduvai Gorge in Tanzania, excavated by the famous duo Mary and Louis Leakey, mainly in the 1950s. Three decades later Henry Bunn, an anthropologist at the University of Wisconsin-Madison, studied the animal fossils from 1.8-million-year-old sediments at the site known as FLK Zinj (after a fossil found there that had at one time been designated Zinjanthropus). He inferred from the abundance of meat- and marrow-rich limb bones that early humans had had first choice among the parts of these animal carcasses by virtue of having hunted them.

This interpretation was supported by simple stone tools the Leakeys had found in the same deposits. The Oldowan technology (named for these kinds of tools, found at Olduvai Gorge) includes sharp stone knives, or flakes; cores from which those flakes were struck; and fist-sized rounded hammerstones used to strike the flakes from the cores. These seemingly basic tools allowed early humans to gain access to a much broader array of foods. The sharp flakes could be used to slice meat from bones or to whittle sticks to dig for underground roots or water; the cores and hammerstones could be used to process plants and bash open bones to get access to the fat-rich marrow and brains inside.

A problem came up as early as 1957, however, when Sherwood Washburn, of the University of California, Berkeley, reported on carnivore kills he observed in Wankie Game Reserve in (then) Rhodesia. He noticed that the parts of skeletons most often remaining more or less intact after carnivores had eaten their fill were skulls and lower jaws, the least edible parts of the animals. According to Washburn, the meat-bearing bones, often broken up, that Dart had found in australopithecine deposits must have been brought there by some other animal and not the australopithecines—perhaps hyenas, which he and others had observed accumulating such bones around their dens.

In 1981, C. K. “Bob” Brain published a seminal book titled The Hunters or the Hunted?, which came to the same conclusions. After studying a large collection of goat bones in the central Namib desert that had been discarded by modern people and then chewed by dogs, Brain hypothesized that the skeletal part profiles could best be attributed to the durability of those particular bones themselves, rather than to selection by early human hunters. Brain applied a similar explanation to fossil assemblages from early human sites in the Transvaal region of South Africa. In his view, a number of nonhuman agencies that could cause accumulations in modern caves had done so in these fossil assemblages: fluvial activity, carnivores such as hyenas and leopards, porcupines, owls, and natural deaths. It was clear that more evidence was needed to evaluate whether early human activities were in fact responsible for the accumulation of animal fossils at prehistoric sites.

In that same year, 1981, incontrovertible evidence of early human butchery came to light, in the form of linear striations on fossils which were identified as cut marks made by the stone tools found in abundance at the FLK Zinj site. Bunn and, in a separate study, Rick Potts from the Smithsonian Institution and Pat Shipman from Pennsylvania State University, used a scanning electron microscope to demonstrate that these marks were different from the shallow, chaotically oriented scratches seen on some fossils. This sedimentary abrasion is thought to be the result of sand grains rubbing against the bones as they tumbled around in rivers or were trampled on by animals. The cut marks, by contrast, were shorter, deeper, and often located on the parts of bones where muscles attach. They seemed to show conclusively that early humans were proficient hunters of the extinct antelopes, zebras, and similar animals found alongside the early human fossils and stone tools in the 1.8-million-year-old deposits.

Although more than one kind of early human had been found at Olduvai, for several decades the thousands of Oldowan stone tools and hundreds of cut-marked bones were attributed exclusively to the fossils of our genus, Homo. With a new report of a jaw from the site of Ledi Geraru in the Afar region of Ethiopia, the fossil evidence of our genus now extends back 2.8 million years. Until recently, Ethiopia had also yielded the earliest evidence of stone tools and cut marks on animal fossils (from 2.5 to 2.6 million years ago) at the sites of Bouri and Gona, and the earliest Oldowan stone tools from Gona, dated to 2.5 million years ago. Altogether, a tidy package of archaeological evidence of the earliest butchery and stone tools (in other words, carnivory) seems to have emerged by at least 2.5 million years ago with the origins of our genus.

Further Back in Time

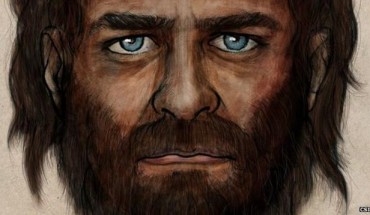

This package suddenly took on considerably greater antiquity, however, when a team headed by Zeresenay Alemseged, of the California Academy of Sciences, announced a new finding in 2010. The site of Dikika, in Ethiopia, had yielded two bones, each with multiple cut marks, from sediments dated at 3.4 million years old. This evidence pushed back the date for the earliest human meat-eating by 800,000 years, to a time before the advent of genus Homo, thereby jeopardizing the idea that using stone tools to butcher and eat large animals was unique to our genus. The researchers knew skeptics might scoff at so few cut-marked bones, even with the presence of a stone chip embedded in one of the cut marks, so they subjected the fossils to rigorous investigation. They compared them with experimental collections of cut-marked bones, subjected the marks to independent “blind tests” by experts who didn’t know the age or geographic location of the fossils, and used sophisticated microscopy and spectrometry to show the antiquity of the marks. When critics noted that no stone tools were found with the cut-marked fossils, the group suggested early humans may have used naturally sharp stones for butchery, and argued that meat consumption and stone tool use may have predated stone tool manufacture. These cut marks are the same age as the fossils of Australopithecus afarensis found in nearby deposits at Dikika. The game-changing conclusion of this evidence was that Homo had not been the only meat-eater among human ancestors; Australopithecus had also been capable of butchering and eating animals, if only on rare occasions.

Additional support for this claim came to light in 2015, when Sonia Harmand of Stony Brook University and her team reported that they had found 149 stone tools dating back to 3.3 million years ago from the site of Lomekwi, Kenya. The flakes they found showed clear signs of having been intentionally removed from the cores; according to the research team, they could not have resulted from accidental rock fracture. In one remarkable instance, researchers found both a flake and the core from which it had been struck; the two still fit together perfectly. The cores found at Lomekwi, however, are much larger and heavier than typical Oldowan ones and would have been difficult to flake by means of the technique that had probably been used to make Oldowan tools. The Stony Brook team concluded these tools were made mainly by early humans raising large rocks above their heads and bringing them down onto a hard surface to fracture them, much the way chimpanzees and other primates today crack open nuts with stones. This newly identified type of stone tool tradition, now known as Lomekwian, is hundreds of thousands of years older than any Homo fossils; the only early human species found in the West Turkana region at this time is Kenyanthropus platyops, known from only a few fossils but broadly similar in anatomy to australopithecines.

The earliest evidence of what we might call persistent carnivory—part of the intensification and expansion of meat-eating—comes from Kanjera South, a research site in Kenya run by Rick Potts of the Smithsonian Institution and Tom Plummer of Queens College at the City University of New York. In 2013, Joe Ferraro of Baylor University, Potts, Plummer, and I and other colleagues announced that we had documented this evidence on more than 3,700 animal fossils and 2,900 stone tools in three separate layers going back about two million years. The archaeological and fossil evidence includes dozens of bones bearing cut marks and percussion marks. The indications are clear that early humans, most likely Homo habilis or Homo erectus (given the time period), processed more than 50 animal carcasses during repeated visits to the same location over hundreds to thousands of years. Most of the carcasses were fairly complete small (goat-sized) antelopes, along with some parts of larger (reindeer-sized) antelopes.

Yet the Oldowan stone tools found alongside butchered bones at Kanjera and dozens of other sites in Africa do not seem suitable for hunting; these tools were most likely used for cutting and pounding, and Oldowan technology did not include spears or arrowheads. If early humans weren’t hunting the animals they were eating, how did they get access to them?

Some years before our finds at Kanjera, Rob Blumenschine, then of Rutgers University, had studied the leftovers of kills eaten by large carnivores in the Serengeti and Ngorongoro and proposed that early humans could have scavenged flesh scraps and marrow from these kills. Other, less common opportunities for scavenging could have included animals that died by drowning in rivers or from diseases or other natural causes. Between one and two million years ago the large carnivore communities of the African savanna consisted not only of lions, hyenas, leopards, cheetahs, and wild dogs, as we see today, but also at least three species of saber-toothed cats, including one that was significantly larger than the largest male African lions. These cats may have hunted larger prey, leaving even more leftovers for early humans to scavenge.

Early humans might have stolen prime dinner fare from these formidable opponents in a couple of different ways. One way would have been to confront carnivores as they were in the midst of eating their prey and somehow chase them off. The early humans could have accomplished this by throwing stones or sticks, rushing the predators in a big group, waving their arms and making lots of noise, or even ambushing them. This presumably would have yielded large portions of meat, especially from larger prey animals. In another, more passive approach, early humans could have waited until the coast was clear and the carnivores had left the area to safely move in and take what was left over. This seems a reasonable strategy, but it leaves open the question of whether such passive scavenging would have been worth an early human’s time and energy.

Rewards of Scavenging

I decided to pursue this question by documenting the resources left over from carnivore kills in a different African ecosystem from Blumenschine’s and others’ studies in Tanzania, because those had taken place in areas of some of the highest carnivore competition on Earth today. In order to model what scavenging opportunities may have been like at times in the past when the carnivore community was dominated by felids (big cats and their relatives, including sabertooths), as has been indicated in some areas where early humans butchered animals, I went to a private game reserve in Kenya that is now called Ol Pejeta Conservancy. There, lions were common but hyenas were rare. I spent about seven months simulating passive scavenging by waiting until the carnivores had eaten their fill and moved off, and then documenting how much meat and marrow was left on carcasses. It turns out there was a lot! In my sample of lion kills of larger animals, mainly zebras, I found that 95 percent of bones were abandoned with at least some flesh remaining on them, and over 50 percent had significant amounts of meat left. Most of the other bones had scraps; hardly any bones were totally defleshed. The average zebra hindlimb, for example, contains almost 23 kilograms of meat, so even when 90 percent of it has been consumed, it could still yield 2.28 kilograms of meat, and that’s only from one hindlimb. An entire zebra carcass could yield almost 15 kilograms of meat in scraps of various sizes. Using an estimate of four calories per gram of flesh, this would provide more than 6,000 calories from a zebra carcass. That’s almost 11 Big Macs, enough for the entire daily caloric requirements of about three male Homo erectus if each individual required approximately 2,090 to 2,290 calories per day, as has been previously estimated.
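For readers who want to check the arithmetic, here is a rough sketch in Python that uses only figures quoted above. The variable and function names are illustrative, the hindlimb mass of 22.8 kilograms is an assumption chosen to match "almost 23 kilograms," and the carcass total of 6,000 calories is taken as stated rather than rederived.

```python
# Back-of-the-envelope check of the scavenging figures quoted in the text.
# All names are illustrative; the numbers come from the paragraph above.

HINDLIMB_MEAT_KG = 22.8          # assumption: "almost 23 kilograms"
FRACTION_CONSUMED = 0.90         # share already eaten by carnivores
STATED_CARCASS_KCAL = 6000       # "more than 6,000 calories", taken as given
DAILY_NEED_KCAL = (2090, 2290)   # estimated range for a male Homo erectus


def leftover_meat_kg(total_kg: float, fraction_consumed: float) -> float:
    """Meat remaining after carnivores have eaten a given fraction."""
    return total_kg * (1.0 - fraction_consumed)


def individuals_fed(total_kcal: float, daily_need_kcal: float) -> float:
    """Number of individuals whose daily requirement the calories would cover."""
    return total_kcal / daily_need_kcal


hindlimb_left = leftover_meat_kg(HINDLIMB_MEAT_KG, FRACTION_CONSUMED)
fed_low = individuals_fed(STATED_CARCASS_KCAL, max(DAILY_NEED_KCAL))
fed_high = individuals_fed(STATED_CARCASS_KCAL, min(DAILY_NEED_KCAL))

print(f"Meat left on one hindlimb: {hindlimb_left:.2f} kg")
print(f"Individuals fed for a day: {fed_low:.2f} to {fed_high:.2f}")
```

Under these assumptions the stated calorie total covers between about 2.6 and 2.9 daily requirements, which is where the figure of "about three" individuals comes from.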

With a slight change of tactics, early humans may have scavenged from another carnivore as well. Whereas lions, hyenas, and wild dogs all live in social groups, have high intragroup competition when eating their prey, and spend most or all of their time on the ground, leopards behave differently. They are solitary, and in some areas (probably to avoid competition with larger predators) they hoist their kills into trees and store them there for a few days, returning periodically to eat them. In the early 1990s, Blumenschine and his then-graduate student John Cavallo postulated that for early human species that retained tree-climbing abilities, such as australopithecines and probably Homo habilis, scavenging from these kills would have been a relatively low-risk proposition. It’s true that leopard prey tends to be smaller than that of lions and hyenas, and the extent of meat available from tree-stored leopard kills has yet to be well-documented (although I observed quite a bit of meat left on my few leopard samples from Ol Pejeta Conservancy); nevertheless, I suspect this may have been part of the overall early human scavenging regime.

Not only the meat on bones but the marrow inside them would have been an important source of nutrition for early humans. It was Blumenschine, this time with then-graduate student Marie Selvaggio, who in 1988 first recognized percussion marks on animal fossils: pits and striations left from bashing bones open with baseball-sized hammerstones to gain access to marrow. Blumenschine, together with then-graduate student Cregg Madrigal, further noted in 1993 that the skeletal part profiles Bunn claimed were indications of access to the meatiest bones at FLK Zinj also reflected the bones that contained the most fat-rich marrow. If, as it seems, the early humans at sites such as FLK Zinj had access mainly to bones that had already been stripped of most of their meat by larger carnivores, the calorie-rich marrow in these bones may still have been available to creatures ingenious enough to get to it. This behavior would fall in line with what we have documented at Kanjera South, where early humans transported not only limb bones but also the isolated remains of the heads of larger prey animals to the archaeological site before breaking them open and consuming the brains, taking advantage of another resource that even the largest African carnivores were unable to exploit.

We think that early humans at Kanjera probably had early access to small animals, such as goat-sized gazelles, two million years ago. The ancient butchery marks on these smaller animals, often juveniles, are mostly on the bones from which meat is eaten early in the typical sequence of consumption by wild carnivores (as was observed by Blumenschine and confirmed by my own observations). They were probably not scavenged; if carnivores had fed on them first, very little would have been left of these bones at all. Whether the animals were deliberately hunted, and by what means, we still don’t know: Maybe the early humans were hiding in trees or behind bushes and throwing rocks to dispatch them. By contrast, we think the larger animals at Kanjera were probably scavenged, because they display butchery marks on the bones that are usually eaten toward the end of the carnivore consumption sequence.

At some point, though, there must have been a shift in the ways early humans obtained meat, because the fossil record clearly shows that our ancestors were getting access to the best parts of larger animals by at least 1.5 million years ago. In an article published in 2008, I examined butchery patterns on more than 6,000 animal bones from three sites at Koobi Fora, Kenya, that date back to that period. The early humans there (probably Homo erectus) butchered many different bones from animals both large and small; at least nine different animals had been transported to each site for consumption. The result was more than 300 bones that showed signs of butchering, including a number of the choicest bones: those from which the meat is usually eaten first by carnivores.

The presence of numerous cut marks and percussion marks, along with very few carnivore tooth marks, makes it clear that early humans were the ones processing the meatier parts of the carcasses; if carnivores were getting any access to them at all, it was probably rare, and only after the early humans had finished with them. On one limb bone, a carnivore tooth mark was found directly on top of a cut mark, indicating the early human was there first. The early humans were not choosing these parts to the exclusion of others (hence the presence of smaller, less meaty bones as well), so it appears they tended to extract all the resources they could, including marrow. The thorough processing suggests, in fact, that early humans were able to control specific places on the landscape where they could carry out this task.

As for the tooth marks from several species of large mammalian carnivores and crocodiles on some of the butchered bones from various archaeological sites in Africa, such marks constitute unequivocal evidence that our meat-eating ancestors were directly competing with carnivores for prey carcasses. It is also clear that at least some of the time these encounters did not end well for them; several early human fossils bear tooth marks from predators that presumably caused their demise. In a striking example of predation upon human ancestors, a 1995 paper by Lee Berger and Ron Clarke of the University of the Witwatersrand describes evidence of an eagle attack that can be seen on the skull of the Taung child. It’s both sad and ironic that this small child, whose fossilized skull had inspired Dart’s vision of our murderous ancestors, turned out to be the lowly meal of a large bird of prey.

When Cooking Became Crucial

For a long time, it was assumed that all of the meat, marrow, and brains in the early human diet came from terrestrial mammals. After studying patterns in fatty acid composition of aquatic food, however, Josephine Joordens of Leiden University and her colleagues proposed in 2014 that eating fatty fish could have significantly increased the availability of certain long-chain polyunsaturated fatty acids (LC-PUFA), which helped to support the initial moderate increase in brain size of early humans about 2 million years ago.

Four years earlier, David Braun of the George Washington University and his colleagues announced that they had discovered the earliest evidence of human butchery of aquatic animals. At a 1.95-million-year-old site in Koobi Fora, Kenya, they found evidence that early humans were butchering turtles, crocodiles, and fish, along with land-dwelling animals. Aquatic animals are rich in nutrients needed in human brain growth, such as DHA (docosahexaenoic acid), one of the most abundant LC-PUFAs in our brains.

It’s around this time that we see in the fossil record (based mainly on rib and pelvis fossils) a reduction of the size of the gut areas in Homo erectus, the early human species credited with consistently incorporating animal foods into its diet. This species evolved a smaller, more efficient digestive tract, which likely released a constraint on energy and permitted larger brain growth, as predicted by the expensive tissue hypothesis. Yet the increase in brain size we see in the fossil record at about 2 million years ago is basically tracking body size; while absolute brain size was increasing, relative brain size was not. Maybe meat was not completely responsible—so what was?

Perhaps it was the shift from eating antelope steak tartare to barbecuing it. There are hints of human-controlled fires at a few sites dating back to between one and two million years ago in eastern and southern Africa, but the first solid evidence comes from a one-million-year-old site called Wonderwerk Cave, in South Africa. In 2012, Francesco Berna, then of Boston University, and his colleagues reported bits of ash from burnt grass, leaves, brush, and bone fragments inside the cave. Microscopic study showed that the small ash fragments are well preserved and have jagged edges, indicating that they were not first burned outside the cave and blown or washed in, as those jagged edges would have been worn away. Also, this evidence comes from about 30 meters inside the cave, where lightning could not have ignited the fire.

Soon after that, the 790,000-year-old site of Gesher-Benot Ya’aqov in Israel yielded evidence of debris from ancient stone tools that had been burned by fire. Near the burnt tools were concentrations of scorched seeds and six kinds of wood, including three edible plants (olive, wild barley, and wild grape), from more than a dozen early hearths. This marks the first time that early humans came back to the same location repeatedly to cook over these early campfires. Hearths are more than just primitive stoves; they can provide safety from predators, be a warm and comforting location, and serve as places to exchange information.

Cooking was unquestionably a revolution in our dietary history. Cooking makes food both physically and chemically easier to chew and digest, enabling the extraction of more energy from the same amount of food. It can also release more of some nutrients than the same foods eaten raw and can render poisonous plants palatable. Cooking would have inevitably decreased the amount of time necessary to forage for the same number of calories. In his 2009 book Catching Fire, primatologist Richard Wrangham postulates that cooking was what allowed our brains to get big. It turns out that when we use fossil skulls to measure brain size, we see the biggest increase in brain size in our evolutionary history right after we see the earliest evidence for cooking in the archaeological record, so he may be on to something. Modern human bodies are so adapted to cooked foods that we have difficulty reproducing while on an exclusive diet of raw foods. For example, a 1999 study found that about 30 percent of reproductive-age women on a long-term raw-food diet had partial to complete amenorrhea, which was probably related to their low body weight.

From pilfered-from-predators to processed-and-packaged, animals have been part of human diets for more than 3 million years. To fill in a more detailed picture of meat-eating among our primate ancestors, we need to find additional prehistoric sites with cut-marked fossils so that we can begin to understand how butchering (and, later, cooking) may have related to the environments in which early humans were living. Just as important are modern-day experiments in butchering with stone tools, which help us better interpret the cut and percussion marks on animal fossils. My own next steps include finding more butchery-marked fossils in the field, studying such fossils that already exist in museum collections, and helping to design and carry out butchery experiments, all with the goal of getting to the root of our meat-eating ancestry.

Bibliography

- Aiello, L. C., and P. Wheeler. 1995. The expensive-tissue hypothesis: The brain and digestive system in human and primate evolution. Current Anthropology 36:199–221.

- Berna, F., et al. 2012. Microstratigraphic evidence of in situ fire in the Acheulean strata of Wonderwerk Cave, Northern Cape Province, South Africa. Proceedings of the National Academy of Sciences 109(20):E1215–E1220. doi:10.1073/pnas.1117620109

- Blumenschine, R. J. 1986. Carcass consumption sequences and the archaeological distinction of scavenging and hunting. Journal of Human Evolution 15:639–659.

- Blumenschine, R. J. 1987. Characteristics of an early hominid scavenging niche. Current Anthropology 28:383–407.

- Blumenschine, R. J., and J. A. Cavallo. 1992. Scavenging and human evolution. Scientific American 267:90–96.

- Brain, C. K. 1981. The Hunters or the Hunted? An Introduction to African Cave Taphonomy. University of Chicago Press.

- Bunn, H. T. 1981. Archaeological evidence for meat-eating by Plio-Pleistocene hominids from Koobi Fora and Olduvai Gorge. Nature 291:574–577.

- Ferraro, J. V., et al. 2013. Earliest archaeological evidence of persistent hominin carnivory. PLoS ONE 8(4):e62174. doi:10.1371/journal.pone.0062174

- Harmand, S., et al. 2015. 3.3-million-year-old stone tools from Lomekwi 3, West Turkana, Kenya. Nature 521:310–315. doi:10.1038/nature14464

- McPherron, S. P., et al. 2010. Evidence for stone-tool-assisted consumption of animal tissues before 3.39 million years ago at Dikika, Ethiopia. Nature 466:857–860. doi:10.1038/nature09248

- Pobiner, B., et al. 2008. New evidence for hominin carcass processing strategies at 1.5 Ma, Koobi Fora, Kenya. Journal of Human Evolution 55:103–130.

- Pobiner, B. 2013. Evidence for meat-eating by early humans. Nature Education Knowledge 4(6):1.

- Pobiner, B. 2015. New actualistic data on the ecology and energetics of scavenging opportunities. Journal of Human Evolution 80:1–16.

- Potts, R., and P. Shipman. 1981. Cutmarks made by stone tools on bones from Olduvai Gorge, Tanzania. Nature 291:577–580.

- Villmoare, B., et al. 2015. Early Homo at 2.8 Ma from Ledi-Geraru, Afar, Ethiopia. Science 347:1352–1355. doi:10.1126/science.aaa1343

- Wrangham, R. 2009. Catching Fire: How Cooking Made Us Human. Basic Books.